Cluster convolution for GATE: Difference between revisions

From pCT

No edit summary |

Added code to take edep arguments |

||

| (One intermediate revision by the same user not shown) | |||

| Line 1: | Line 1: | ||

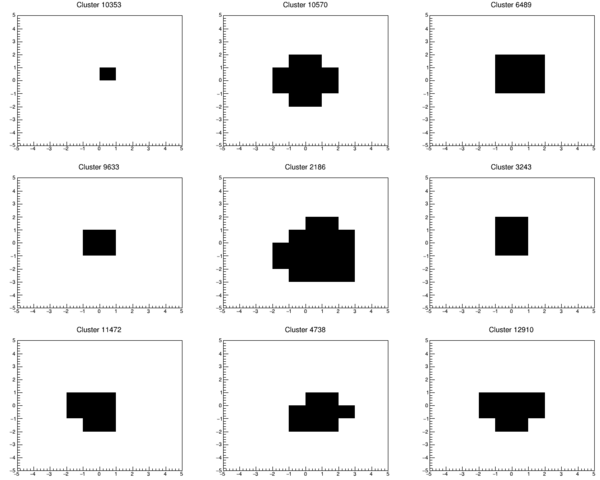

In her MSc project, Silje Grimstad made a filtered library of the clusters from the summer beamtest in Heidelberg. | In her MSc project, Silje Grimstad made a filtered library of the clusters from the summer beamtest in Heidelberg. | ||

The result is ~15k Helium clusters stored as | |||

The result is ~15k Helium clusters stored as x/y arrays in a [[:File:database_final.zip|ROOT file included here]]. | |||

{| class="wikitable" | {| class="wikitable" | ||

| Line 36: | Line 37: | ||

[[File:original_vs_convoluted_hitmap.PNG|1000px]] | [[File:original_vs_convoluted_hitmap.PNG|1000px]] | ||

Below is example code to perform the diffusion on-fly using a Hits container class with Hit objects (x,y,layer,edep,eventID). | |||

For more information about the implementation see [https://github.com/HelgeEgil/focal GitHub]. | |||

<source lang="cpp"> | <source lang="cpp"> | ||

| Line 47: | Line 49: | ||

using namespace std; | using namespace std; | ||

void | void makeSortedClusterDatabase(); | ||

Hits * diffuseHits(TRandom3 * gRandom, Hits * singlePixelHits); | |||

TTree * CDB_treeCluster; | |||

list<Int_t> * CDB_hit_array = 0; | |||

TBranch * CDB_b_hit_array; | |||

TTreeIndex * CDB_index; | |||

Int_t CDB_clusterSize; | |||

Double_t CDB_x_mean, CDB_y_mean; | |||

Int_t CDB_sortIndex[50]; | |||

TFile * CDB_fCluster; | |||

void makeSortedClusterDatabase() { | |||

// From GlobalConstants/MaterialConstants.C / .h | |||

Int_t lastClusterSize = -1; | |||

CDB_fCluster = new TFile("Data/ClusterSizes/ALPIDE/database_final.root", "READ"); | |||

CDB_treeCluster = (TTree*) CDB_fCluster->Get("database"); | |||

CDB_treeCluster->SetBranchAddress("size", &CDB_clusterSize); | |||

CDB_treeCluster->SetBranchAddress("x_mean", &CDB_x_mean); | |||

CDB_treeCluster->SetBranchAddress("y_mean", &CDB_y_mean); | |||

CDB_treeCluster->SetBranchAddress("hit_array", &CDB_hit_array, &CDB_b_hit_array); | |||

// Sort clusters based on cluster size | |||

for (int i=0; i<50; i++) CDB_sortIndex[i] = -1; | |||

CDB_treeCluster->BuildIndex("size"); | |||

CDB_index = (TTreeIndex*) CDB_treeCluster->GetTreeIndex(); | |||

for (int i=0; i<CDB_index->GetN()-1; i++) { | |||

Long64_t local = CDB_treeCluster->LoadTree(CDB_index->GetIndex()[i]); | |||

CDB_treeCluster->GetEntry(local); | |||

if (lastClusterSize < 0 || lastClusterSize != CDB_clusterSize) { | |||

CDB_sortIndex[CDB_clusterSize] = i; | |||

lastClusterSize = CDB_clusterSize; | |||

} | |||

} | |||

} | |||

Hits * diffuseHits(TRandom3 *gRandom, Hits * singlePixelHits) { | |||

// From HelperTools/getTracks.C | |||

Int_t nHits = singlePixelHits->GetEntriesFast(); | |||

Hits * diffusedHits = new Hits(); | |||

Int_t x, y, outX, outY, layer, cs, eventID, idx_x, binPos; | |||

Float_t edep; | |||

Int_t randomClusterIdx; | |||

for (Int_t h=0; h<nHits; h++) { | |||

x = singlePixelHits->getX(h); | |||

y = singlePixelHits->getY(h); | |||

layer = singlePixelHits->getLayer(h); | |||

edep = singlePixelHits->getEdep(h); | |||

eventID = singlePixelHits->getEventID(h); | |||

// The correspondence between CS and Edep [keV/um] is calculated from Ganesh's NIM A Proceedings article. | |||

cs = 4.2267 * pow(edep, 0.6500) + 0.5; | |||

// To keep any outliers within the tabulated clusters in the database | |||

if (cs<2) cs=2; | |||

if (cs>=27) cs=26; | |||

randomClusterIdx = | randomClusterIdx = gRandom->Integer(CDB_sortIndex[cs+1] - CDB_sortIndex[cs]) + CDB_sortIndex[cs]; | ||

CDB_treeCluster->GetEntry(CDB_treeCluster->LoadTree(CDB_index->GetIndex()[randomClusterIdx])); | |||

idx_x = 0; | idx_x = 0; | ||

Int_t thisCS = 0; | |||

for (Int_t n : *CDB_hit_array) { | |||

for (Int_t binPosPow = 0; binPosPow < 10; binPosPow++) { | |||

binPos = pow(2, binPosPow); | |||

if (binPos & n) { | |||

outX = x + (idx_x - CDB_x_mean) + 0.5; | |||

outY = y + (binPosPow - CDB_y_mean) + 0.5; | |||

diffusedHits->appendPoint(outX, outY, layer, eventID, edep/CDB_clusterSize); | |||

} | } | ||

} | } | ||

idx_x++; | |||

} | } | ||

} | } | ||

return diffusedHits; | |||

} | |||

</source> | </source> | ||

Latest revision as of 11:56, 1 April 2019

In her MSc project, Silje Grimstad made a filtered library of the clusters from the summer beamtest in Heidelberg.

The result is ~15k Helium clusters stored as x/y arrays in a ROOT file included here.

| x_mean | y_mean | size | hit_array | height | width | x_start | y_start | i_event | combined |

|---|---|---|---|---|---|---|---|---|---|

| float | float | int | list<int> | int | int | int | int | int | bool |

A random sample of 9 clusters is shown below:

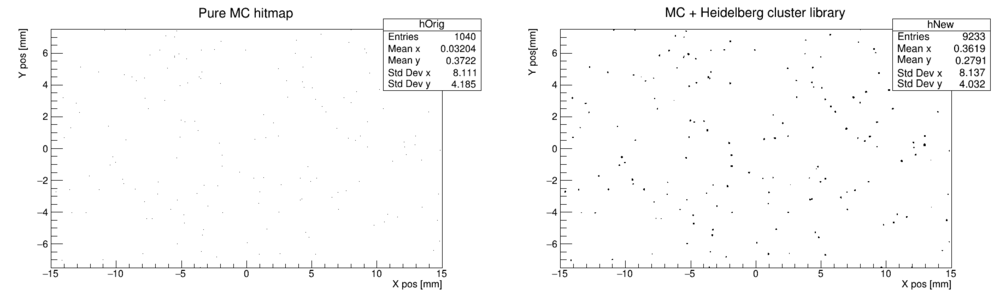

We want to convolute a GATE simulation with clusters from this library. For each hit in the MC data, we pick a random cluster from the library and draw it around the hit position. To do this, use the code at the bottom, with the following result:

Below is example code to perform the diffusion on-fly using a Hits container class with Hit objects (x,y,layer,edep,eventID). For more information about the implementation see GitHub.

#include <TTree.h>

#include <TFile.h>

#include <TRandom3.h>

#include <list>

#include <vector>

using namespace std;

void makeSortedClusterDatabase();

Hits * diffuseHits(TRandom3 * gRandom, Hits * singlePixelHits);

TTree * CDB_treeCluster;

list<Int_t> * CDB_hit_array = 0;

TBranch * CDB_b_hit_array;

TTreeIndex * CDB_index;

Int_t CDB_clusterSize;

Double_t CDB_x_mean, CDB_y_mean;

Int_t CDB_sortIndex[50];

TFile * CDB_fCluster;

void makeSortedClusterDatabase() {

// From GlobalConstants/MaterialConstants.C / .h

Int_t lastClusterSize = -1;

CDB_fCluster = new TFile("Data/ClusterSizes/ALPIDE/database_final.root", "READ");

CDB_treeCluster = (TTree*) CDB_fCluster->Get("database");

CDB_treeCluster->SetBranchAddress("size", &CDB_clusterSize);

CDB_treeCluster->SetBranchAddress("x_mean", &CDB_x_mean);

CDB_treeCluster->SetBranchAddress("y_mean", &CDB_y_mean);

CDB_treeCluster->SetBranchAddress("hit_array", &CDB_hit_array, &CDB_b_hit_array);

// Sort clusters based on cluster size

for (int i=0; i<50; i++) CDB_sortIndex[i] = -1;

CDB_treeCluster->BuildIndex("size");

CDB_index = (TTreeIndex*) CDB_treeCluster->GetTreeIndex();

for (int i=0; i<CDB_index->GetN()-1; i++) {

Long64_t local = CDB_treeCluster->LoadTree(CDB_index->GetIndex()[i]);

CDB_treeCluster->GetEntry(local);

if (lastClusterSize < 0 || lastClusterSize != CDB_clusterSize) {

CDB_sortIndex[CDB_clusterSize] = i;

lastClusterSize = CDB_clusterSize;

}

}

}

Hits * diffuseHits(TRandom3 *gRandom, Hits * singlePixelHits) {

// From HelperTools/getTracks.C

Int_t nHits = singlePixelHits->GetEntriesFast();

Hits * diffusedHits = new Hits();

Int_t x, y, outX, outY, layer, cs, eventID, idx_x, binPos;

Float_t edep;

Int_t randomClusterIdx;

for (Int_t h=0; h<nHits; h++) {

x = singlePixelHits->getX(h);

y = singlePixelHits->getY(h);

layer = singlePixelHits->getLayer(h);

edep = singlePixelHits->getEdep(h);

eventID = singlePixelHits->getEventID(h);

// The correspondence between CS and Edep [keV/um] is calculated from Ganesh's NIM A Proceedings article.

cs = 4.2267 * pow(edep, 0.6500) + 0.5;

// To keep any outliers within the tabulated clusters in the database

if (cs<2) cs=2;

if (cs>=27) cs=26;

randomClusterIdx = gRandom->Integer(CDB_sortIndex[cs+1] - CDB_sortIndex[cs]) + CDB_sortIndex[cs];

CDB_treeCluster->GetEntry(CDB_treeCluster->LoadTree(CDB_index->GetIndex()[randomClusterIdx]));

idx_x = 0;

Int_t thisCS = 0;

for (Int_t n : *CDB_hit_array) {

for (Int_t binPosPow = 0; binPosPow < 10; binPosPow++) {

binPos = pow(2, binPosPow);

if (binPos & n) {

outX = x + (idx_x - CDB_x_mean) + 0.5;

outY = y + (binPosPow - CDB_y_mean) + 0.5;

diffusedHits->appendPoint(outX, outY, layer, eventID, edep/CDB_clusterSize);

}

}

idx_x++;

}

}

return diffusedHits;

}